Feedly launches strikebreaking as a service

The company claims to have not considered before launch whether their new protest and strike surveillance tool could be misused.

I've been an avid RSS user since high school, when I lovingly curated my feed of silly websites to read in Google Reader after school. Eventually, Google unceremoniously tossed Reader into their discarded products graveyard, and I had to find a new service to keep up with what had by adulthood turned into multiple feeds for news, my favorite blogs, and an expansive list of recipe websites that I consult when I want to cook something new. Later, RSS would become critical to how I keep up with what is happening in the crypto industry.

Based on the oldest emails I can find, I've been using Feedly since at least 2015. I immediately loved it. It was free, cleanly designed, browser-based, and I could set it up to show me my feeds in simple chronological order. There was an Android app so I could browse my feeds on the train. For some websites that I found illegible due to their design choices or overzealous advertising, I liked that Feedly's in-product reader reformatted articles in a very comfortable and readable way.

Feedly eventually joined the short list of software for which I signed up for a paid subscription despite not needing any of the paid features, simply because I got so much value from it and wanted to support the company. I happily recommended it to anyone looking for a good feed reader.

That made it all the more disappointing when on Thursday I saw that they were advertising a new feature:

Now, the "AI" stuff wasn't new. Feedly has been boasting about its AI-driven content discovery tools, later anthropomorphized as a cheerful little robot named "Leo", since 2019.

Several of us who've used the tool for a while have discussed how it seemed that Feedly was paying more and more attention to its Enterprise users, advertising tools that were highly corporate and unappealing to me and my food blogs. It felt like every month or two I'd get a pop-up informing me I could "Track competitors and emerging trends" or "Track the influence of the largest US companies". They also took a clear turn towards courting the cybersecurity industry, with somewhat military-sounding blog posts about how to "Track emerging threats" or set up a "Feedly for Threat Intelligence" account.

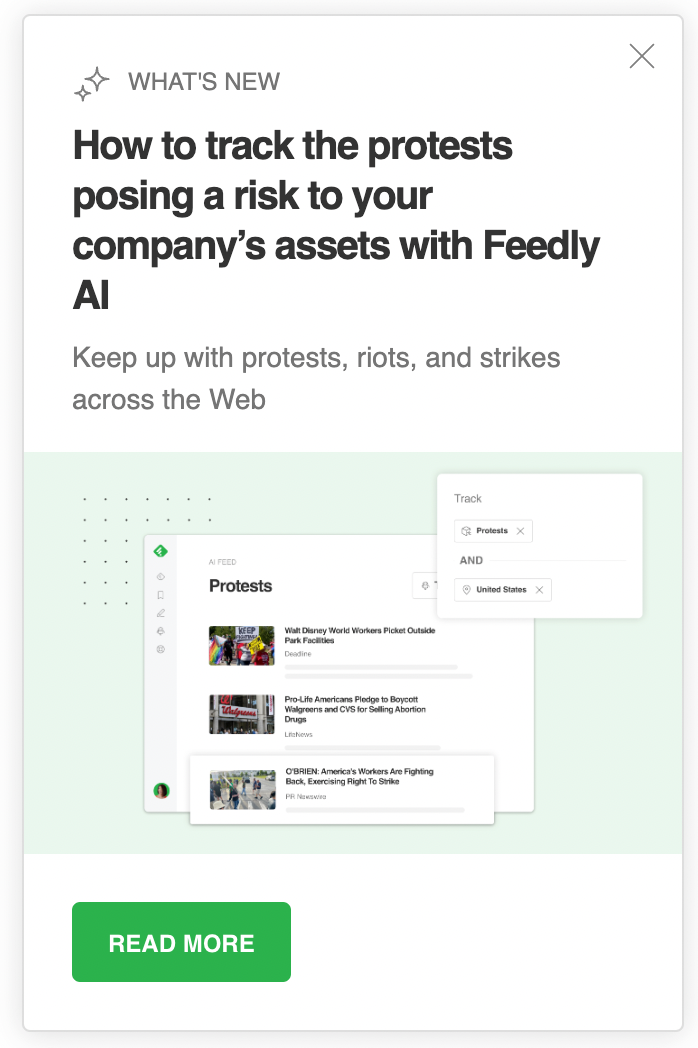

The new development on Thursday, though, was the marketing that Feedly could now help me "track protests posing a risk to [my] company's assets". The blog post went on:

> Today, we are excited to release two new Feedly AI models: Protests and Violent Protests.
>
> They help security analysts track riots, strikes, and rallies that pose a risk to a company's assets and employees.

Yikes.

In a world of widespread, suspicionless surveillance of protests by law enforcement and other government entities, and of massive corporate union-busting and suppression of worker organizing, Feedly decided they should build a tool for the corporations, cops, and unionbusters. Protests, Feedly seems to say, are something that we customers should constantly fear will turn violent and therefore surveil in order to protect our "assets", not something we might want to, oh I don't know, support or participate in.

When I expressed my disappointment on Twitter and canceled both my paid subscription and my account, Feedly responded with the same message they'd copied-and-pasted to several others:

> Sorry for the disappointment, Molly. The blog post should have been clearer. This is used to notify employees of a potential security risk if they travel or work at locations where violence emerges. The content was misleading so deleted.

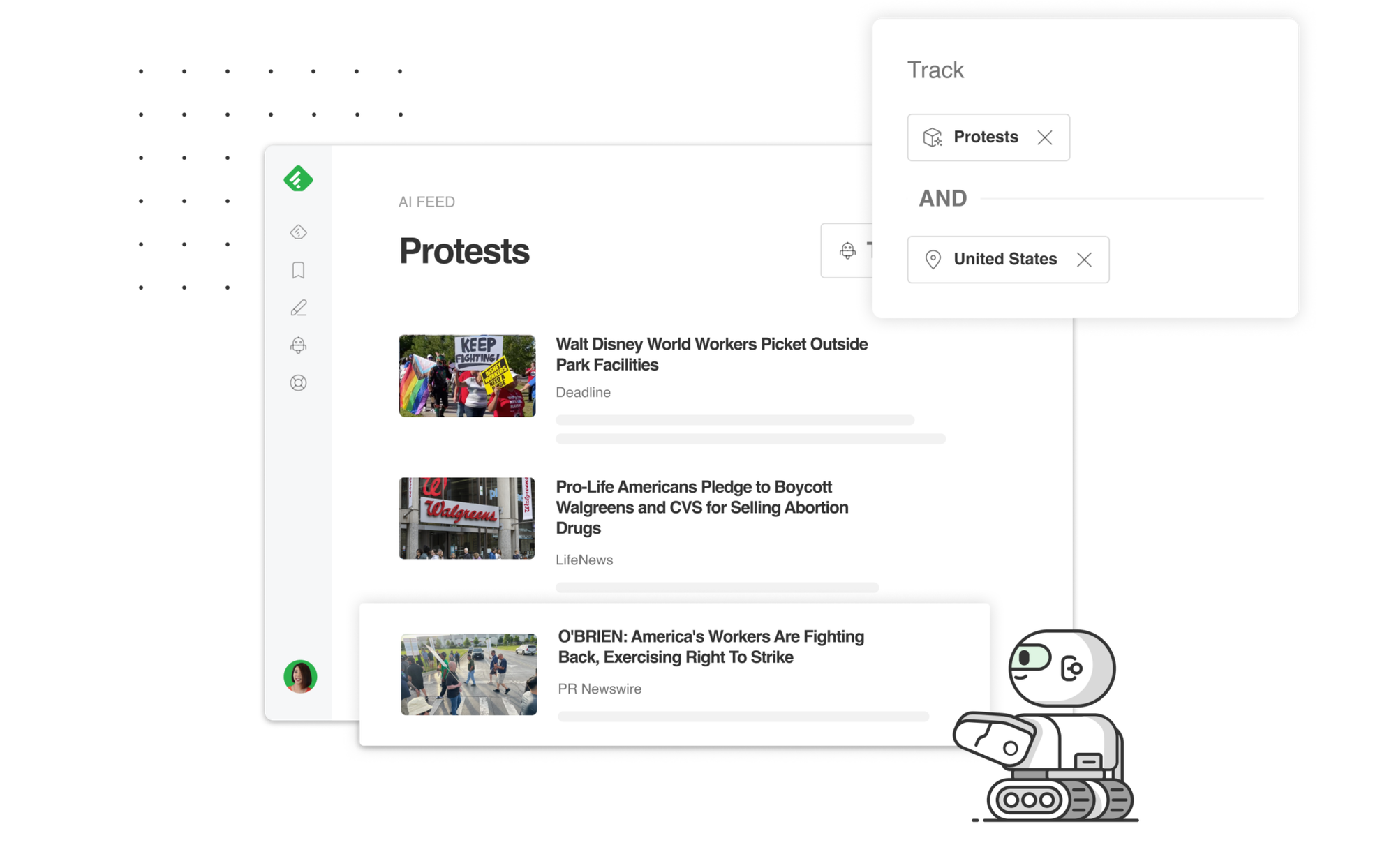

They ultimately deleted the blog post announcement (though it is preserved on the Internet Archive). They repeated the same sentiments as in the tweet in an extremely friendly and shamefully uncritical article in PC Mag, where Feedly CEO Edwin Khodabakchian claimed it was all just a big misunderstanding: "People thought it was being used differently, and it's hard to explain that on Twitter in just a few words." Eventually, Khodabakchian himself replied to my tweets to try to explain further:

Feedly claims they didn't properly communicate how the tool was meant to be used

On the contrary, it seems to me that Feedly did a fine job of communicating that the tool was intended to "track riots, strikes, and rallies that pose a risk to a company's assets and employees".

In the reply to my tweet, the Feedly Twitter account claimed that "This is used to notify employees of a potential security risk if they travel or work at locations where violence emerges". In the PC Mag article, they talk about how "the AI tool was never designed to help companies silence legitimate protests". (How Feedly's AI would determine what is a "legitimate protest" is not addressed.)

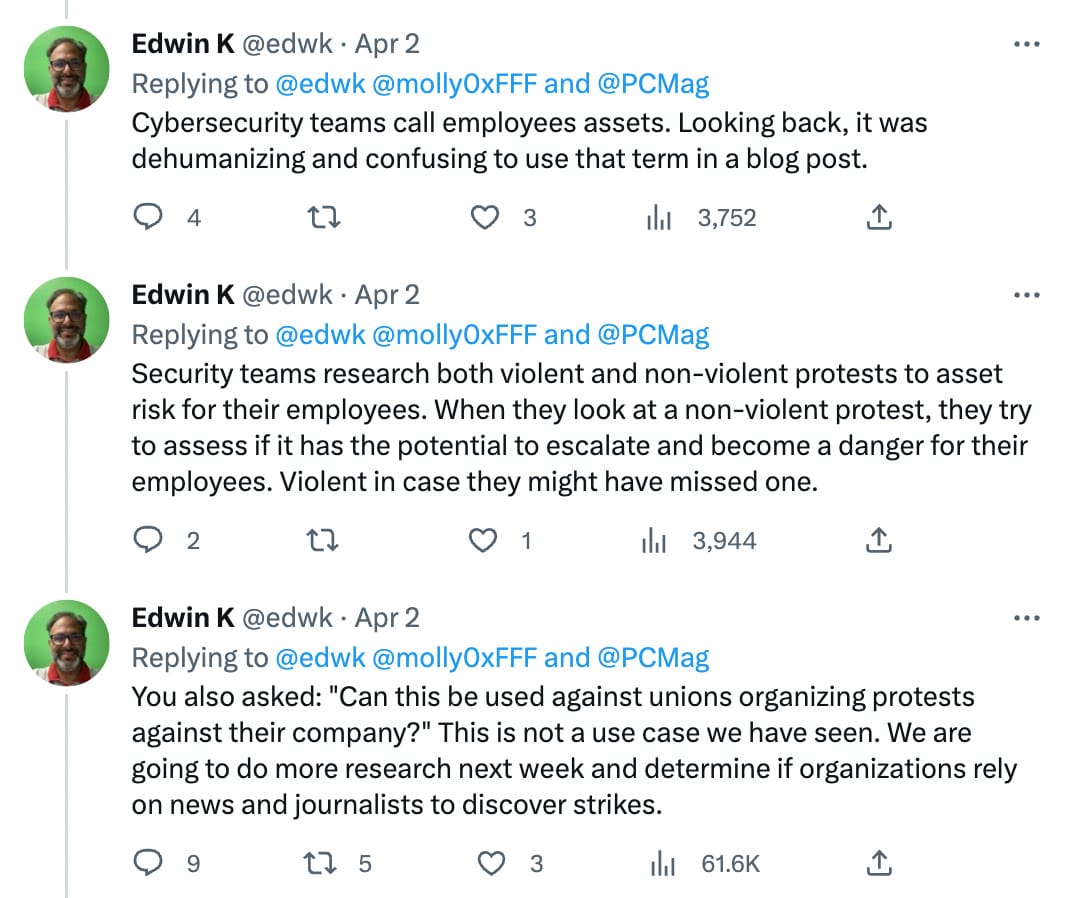

But the images from the blog post tell a different story, boasting how their model can identify relevant snippets that would signify "risky protests", such as:

- "Over 250,000 people took part in demonstrations against pension reform"

- "They urged everyone to join the march"

- "Britain's railways face paralysis as unions resume strikes".

Doesn't sound that "risky" to me.

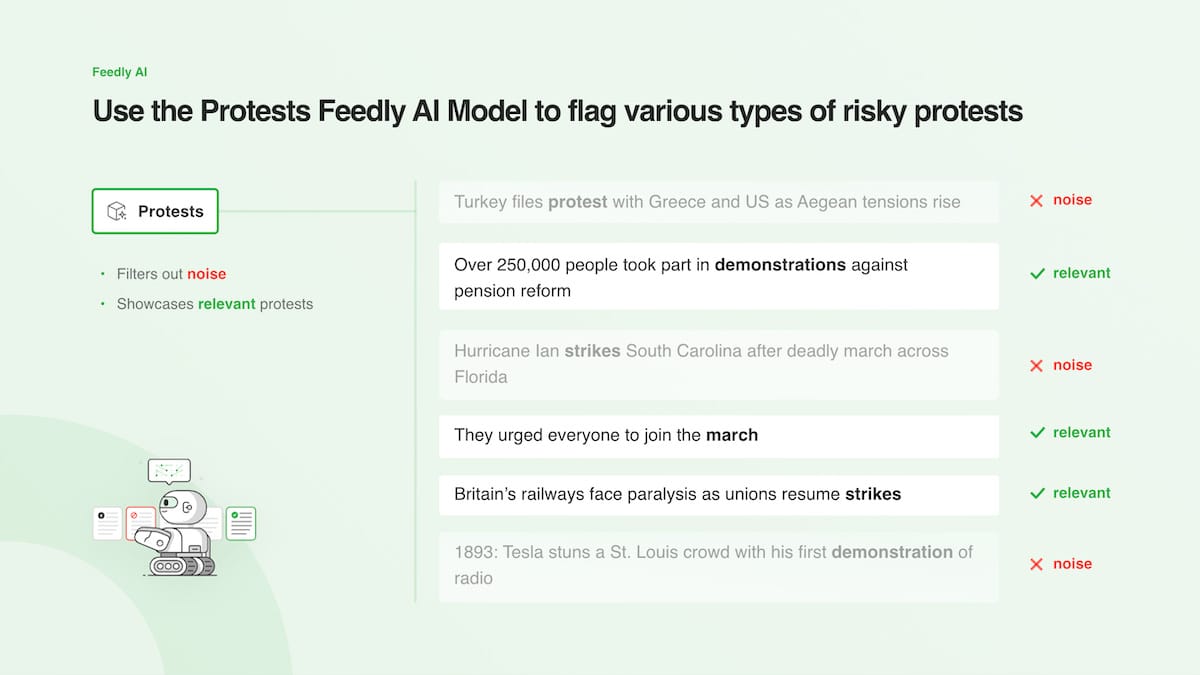

The feature image in the blog post also showed the tool identifying three specific stories pertaining to "protests" and "United States": a news story about a union strike at Disney World, an advocacy group article about a customer boycott of Walgreens, and a Teamsters press release about an increasing number of worker strikes in the US. Again, nothing "risky".

Feedly's CEO also claimed that "cybersecurity teams call employees assets" (acknowledging that this was dehumanizing language, which it is) to try to sidestep some of the criticism from people who noted that it "sounds like your tool cares less about the journalist and more about their laptop". This explanation is hard to believe, given how redundant it would make the blog post's reference to "a company's assets and employees".

Feedly claims they did not consider that the tool could be misused against protesters and striking workers

One of the very first things I wrote when I began to be more publicly outspoken about the technology industry was: "In order to responsibly develop new technologies, it is critical to ask 'how will this be used for evil?'"

Feedly has claimed that they didn't even consider the possibility that a tool to track protests and strikes could be misused. It would be startling if that were true, but it strains credulity that a company so clearly targeting the cybersecurity industry would not consider that software can be misused.

Now, instead of decommissioning the tool to go back and do the absolute bare minimum of thinking around its impacts, Feedly has been promising on Twitter that they will do a week of research and then make a decision.

Here, Khodabakchian mischaracterizes my tweets as though I asked if the tool could be used against unions. In reality, I think I was pretty clear in stating that it could. Khodabakchian seems to suggest in his tweet that as long as they can't find evidence that someone is actively using the tool to unionbust, Feedly is in the clear. The reply from the Feedly account is a little broader, though I would love to know more about how Feedly plans to prove that their model "can be used in harmful ways".

It seems like we may be leading up to a "we investigated ourselves and we found we did nothing wrong" situation, but I suppose time will tell.

I checked back on this post a few months later. The model is still alive and well.

I don't think it's likely that Feedly's so-called "AI" news aggregator technology is game-changing as far as corporate (or certainly governmental) surveillance tech goes. The experiments I've done with their tools that try to go beyond pure RSS have been pretty unimpressive. For example, months ago when I tried to filter a news feed for "crypto", it informed me it would start looking for content about methamphetamines (because apparently "crypto" is some kind of obscure slang for meth; who knew!). Nick Rycar dug even further into the advertising around the newer model, observing that Feedly's own promotional images show it erroneously characterizing an article from Medicaid.gov about Medicaid project proposals as "violent protests", and surfacing results about Pakistan and Colombia when trying to filter for protests in China.

But I also don't think that a company that creates harmful technology should be excused simply because they're bad at it.

As much as there might be teams who want to protect employees by scouring the news for signals that their journalists could be in danger, there are also teams who want to find and suppress employee speech, labor organizing, protest movements, and worker solidarity.

At the bare minimum, companies need to carefully consider how the tools they develop could be misused, and take clear and strong proactive steps to prevent that misuse before the tool is released.

Footnotes

Adding to the weirdness of all this is that Feedly's feature image advertised how Feedly would surface results from LifeNews, an anti-abortion advocacy site that was included in NewsGuard's 2021 "Ten Most Influential Misinformers" list for publishing false claims about abortion safety and COVID-19.